It is no secret that any means of communication needs more bandwidth over time: growing computational capabilities must be matched by stronger communication capabilities. As the saying goes, "To process something unnecessary, one must first send something unnecessary."

Introduction

The concepts of communication and computation are so close that their tight connection is obvious even to the PR departments of major IT companies. Quite often it makes no sense to separate them. Today, when we speak about the growing power of computing devices, we mean both the growing performance of their processors and the growing throughput of their communication channels. These channels include internal wired, external wired and wireless ones.

External wired communication channels are developing mainly in two directions: optical channels are becoming cheaper and more widely available (top-down), and throughput is growing at the low end (bottom-up). However, the two physical media are not yet close enough (above all in price) to compete directly: in 90% of cases the nature of the problem to be solved determines which technology is preferred.

Internal wired channels are switching from specialized parallel interfaces to high-level serial packet interfaces (Serial ATA, 3GIO/PCI Express, HyperTransport). This fosters a convergence of external and internal communication technologies: in the future the separate components inside a computer case will be combined into an ordinary network. This is quite logical, since a modern chipset already works as a network switch equipped with multiple interfaces such as a DDR memory bus, a processor bus and AGP/PCI.

Wireless channels are still at the formative stage in terms of their range of applications. Today they can be used effectively for only a small part of communication tasks, including the most important problem of developing a global network infrastructure. Wireless technologies are only partially suitable for local communications, above all because of their low throughput. At present, two wireless standards prevail.

Cellular communication standards, including the struggling 3G, are the candidates for a wide-area data transfer standard. But the rapidly spreading 802.11 can cause problems for the third generation of cellular networks, as their niches overlap a lot.

Now that we have an idea of the infrastructure, let's turn to peripherals and internal communications. Bluetooth has too narrow a bandwidth for printing, scanning and data exchange with wireless terminals, so it is not a good choice as an internal wireless interface either. Let's look inside the computer case. There are still a lot of cables in there... What should we have ideally:

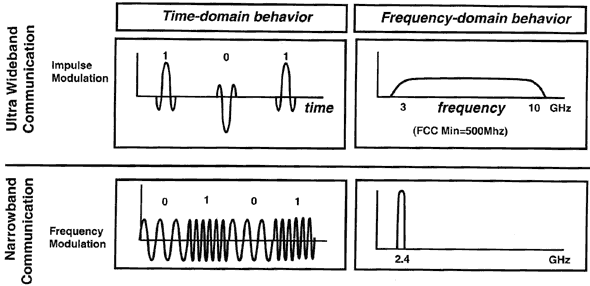

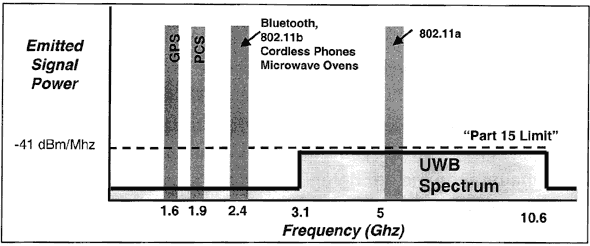

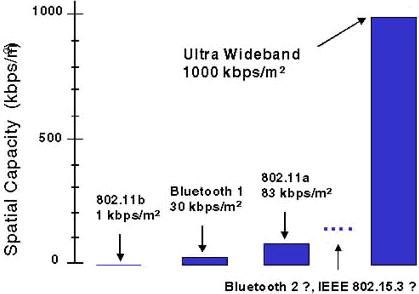

Ultra Wide Band

This concept does not stand for a definite wireless communication standard (the standard now being developed is still far from completion); it is a method of modulation and data transmission that can entirely change the wireless picture in the near future. Without further ado, let's take a look at the diagram that demonstrates the basic principle of UWB:

UWB is shown above and traditional modulation below; the latter is called here Narrow Band (NB), as opposed to Ultra Wideband. On the left we can see the signal on the time axis, and on the right its frequency spectrum, i.e. the energy distribution over the frequency band. Most modern data transmission standards are NB standards: they all work within a quite narrow frequency band, allowing only small deviations from the base (or carrier) frequency. Below on the right you can see the spectral energy distribution of a typical 802.11b transmitter. It occupies a very narrow dedicated spectral band (about 80 MHz in total, with each channel roughly 22 MHz wide) around the reference frequency of 2.4 GHz. Within this narrow band the transmitter emits a considerable amount of energy, necessary for reliable reception within the designed distance range (100 m for 802.11b). Frequency bands are strictly allocated by the FCC and other regulatory bodies, and most of them require licensing. Data are encoded and transferred by modulating the carrier within the described channel, for example by controlling the deviation from the base frequency.

Now take a look at UWB, where the traditional approach is turned upside down. In the time domain the transmitter emits short pulses of a special shape that spreads all the energy of the pulse over a given, quite wide, spectral range (approximately from 3 GHz to 10 GHz). The data, in turn, are encoded in the polarity and mutual positions of the pulses.

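
To make the idea of encoding data in the polarity and position of pulses more concrete, here is a minimal sketch. It is a toy model only: the two-slot frame, the mapping of bit pairs to pulses and the names `encode`, `decode` and `SLOTS_PER_FRAME` are assumptions made for illustration and are not taken from any UWB specification.

```python
# Toy illustration only: the frame layout and the bit mapping are assumptions,
# not part of any UWB standard.
SLOTS_PER_FRAME = 2  # a pulse can sit in slot 0 or slot 1 of its frame


def encode(bits):
    """Turn each pair of bits into one pulse.

    The first bit of the pair selects the pulse position (slot 0 or 1),
    the second bit selects the polarity (+1 for 1, -1 for 0).
    """
    if len(bits) % 2:
        raise ValueError("need an even number of bits")
    signal = []
    for i in range(0, len(bits), 2):
        pos_bit, pol_bit = bits[i], bits[i + 1]
        frame = [0] * SLOTS_PER_FRAME
        frame[pos_bit] = 1 if pol_bit else -1
        signal.extend(frame)
    return signal


def decode(signal):
    """Recover the bits by locating the pulse in each frame and reading its sign."""
    bits = []
    for i in range(0, len(signal), SLOTS_PER_FRAME):
        frame = signal[i:i + SLOTS_PER_FRAME]
        pos = max(range(SLOTS_PER_FRAME), key=lambda k: abs(frame[k]))
        bits.extend([pos, 1 if frame[pos] > 0 else 0])
    return bits


message = [1, 0, 0, 1, 1, 1]
pulses = encode(message)
print(pulses)                     # one signed pulse per two-slot frame
print(decode(pulses) == message)  # the round trip restores the message
```

In this sketch every pulse carries two bits, one in its position and one in its sign. A real receiver would also have to cope with noise, timing jitter and multipath, which are ignored here.
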
While the power summed over the whole band is sufficient for reliable reception, in each given part of the spectrum (i.e. in each licensed frequency band) the UWB signal stays below an extremely low level, much lower than that of NB signals. As a result, under the respective FCC regulation such a signal is permitted even though it also occupies parts of the spectrum used for other purposes:

So, most of the energy of the UWB signal falls into the frequency range from 3.1 to 10.6 GHz, and the spectral power density does not exceed the limit set by Part 15 of the FCC Regulations (-41 dBm/MHz). Below 3.1 GHz the signal almost disappears: its level is lower than -60 dBm/MHz. The closer the pulse formed by the transmitter is to the ideal shape, the less energy leaks out of the main range. Even so, the permissible deviation of the pulse from the ideal shape must be limited, hence the second limit. The spectral range below 3.1 GHz is avoided so as not to create problems for GPS systems, whose accuracy can suffer a lot from outside signals even if their density is lower than -41 dBm/MHz. That is why an additional 20 dB (down to -60 dBm/MHz) was reserved in the range up to 3.1 GHz; it is not obligatory, but it seems to be welcomed by military bodies.

So, we have managed to take a marginal part of the spectrum without breaking the current rules. The total energy the transmitter can fit into this band is defined by the area under the spectral characteristic (see the filled zones in the previous picture). In the picture this area is much greater for UWB than for traditional NB signals such as 802.11b or 802.11a. So, with UWB we can send data over longer distances or send more data, especially if many simultaneously working devices are located close to each other.

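
The "area under the spectral characteristic" can be estimated with a few lines of arithmetic. The sketch below assumes an idealized transmitter whose spectrum is perfectly flat at the -41 dBm/MHz limit across the whole 3.1 to 10.6 GHz range and ignores everything outside it; a real pulse will not fill the mask this evenly, so the result is an upper bound under that assumption. The function names are illustrative.

```python
import math

# Values quoted in the text; the flat spectrum is an idealizing assumption.
LIMIT_DBM_PER_MHZ = -41.0
F_LOW_GHZ, F_HIGH_GHZ = 3.1, 10.6


def total_power_dbm(density_dbm_per_mhz, bandwidth_mhz):
    """Integrate a flat power density over a bandwidth, working in decibels."""
    return density_dbm_per_mhz + 10 * math.log10(bandwidth_mhz)


def dbm_to_mw(dbm):
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (dbm / 10)


bandwidth_mhz = (F_HIGH_GHZ - F_LOW_GHZ) * 1000
total_dbm = total_power_dbm(LIMIT_DBM_PER_MHZ, bandwidth_mhz)
total_mw = dbm_to_mw(total_dbm)

print(f"bandwidth:   {bandwidth_mhz:.0f} MHz")
print(f"total power: {total_dbm:.2f} dBm ({total_mw:.2f} mW)")
```

Under these assumptions the 7500 MHz of bandwidth adds a little under 39 dB to the per-megahertz limit, which gives a total of roughly -2.2 dBm, or about 0.6 mW, spread over the whole band.
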
Here is a diagram with the designed maximum density of data transferred per square meter:

The density of transferred data able to coexist on the same square meter is much higher for UWB than for the popular NB standards. That is, it will be possible to use UWB for intrasystem communication or even for interchip communication within one device!

UWB actually tries to solve the problem of inefficient spectrum utilization, much like Hyper-Threading solves the problem of idle functional units in a processor. Frequency bands dedicated to different services remain unused most of the time. Even in a very dense city environment, most of the spectrum is not in use at any given moment, which is why the radio spectrum is used irrationally:

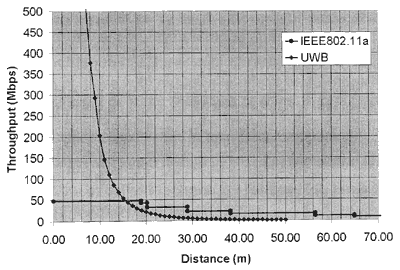

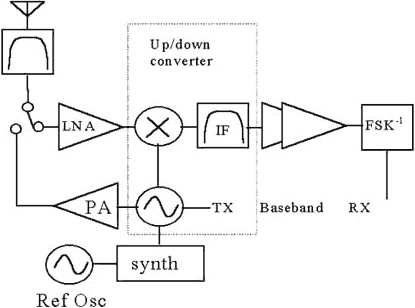

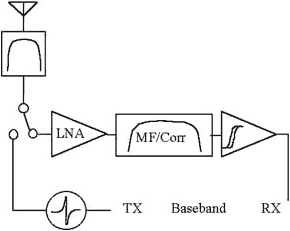

Whichever direction we look in, nothing seems to be good, so it is high time to start improving the methods of radio communication and the division of the air.

In the case of NB, the frequency and width of the dedicated spectral range largely define the bandwidth of the channel (though the real situation is much more complicated), and the transmitter's power defines the distance range. But in UWB these two concepts intertwine, and we can distribute our capabilities between distance range and bandwidth. Thus, at small distances, for example in interchip communication, we can get huge throughput without increasing the total transmitted power and without cluttering up the air, i.e. without impeding other devices. Look at how the throughput of data transferred with UWB modulation depends on distance:

While the traditional NB standard 802.11a uses an artificially created dependence of throughput on distance (a fixed set of data rates switched discretely as the distance increases), UWB realizes this dependence in a much more natural way. At short distances its throughput is so great that it makes our dreams of interchip communication real, but at longer distances UWB loses to the NB standard. Why? On the one hand, the theoretical amount of energy transferred, and therefore the maximum amount of data, is higher. On the other hand, we must remember that in real life information is always transferred with large redundancy. Besides the amount of energy, the design philosophy also has an effect, for example the character of the modulation, i.e. how stably and losslessly it is received and detected by the receiver. Let's compare the classical:

... and UWB transceivers:

The classical transceiver contains a reference oscillator (Synth) which, as a rule, is stabilized with a reference crystal element (Ref Osc).
Then, on reception, this frequency is subtracted from the received signal, and on transmission it is added to the data being transferred. For UWB the transmitter looks very unsophisticated: we just form a pulse of the required shape and send it to the antenna. On reception we amplify the signal, pass it through a band-pass filter that selects our working spectral range and... that's all, here is our ready pulse. The only problem is how to detect it! This is the key to increasing the effective distance of UWB. Of course, it is much more difficult to detect a single pulse than a series of oscillations of a carrier frequency. So, for UWB to succeed we must not only create keys (pulse generators) that produce a strictly defined shape and switch at around 3 GHz, but also develop high-quality detectors of such pulses, which is a far more complicated problem.

Be that as it may, a UWB transceiver is much simpler than an NB one and can be assembled entirely on a chip. The most important advantage is that a UWB transmitter needs no analog part: the signal can be sent to the air right from the chip, and on reception the analog part is much simpler and can be implemented not only in hybrid technologies but also in basic ones, i.e. CMOS and the like.

One more interesting aspect of UWB comes from radiolocation (where wideband technologies were most often used before): the potential to create networks able to determine the geometrical positions of objects. This requires sets (grids) of antennas, which are very easy to make for UWB. It can be very useful for addressing objects: just imagine a universal remote control that knows which device it is aimed at at a given moment. One more application is the creation of a dynamic antenna directivity pattern to improve reception of signals from a particular device while ignoring signals from others.
This approach will further improve the spatial efficiency of air utilization. The first standards and products based on UWB will be available in 2005. And finally, here is a comparison table of the characteristics:

Alexander Medvedev (unclesam@ixbt.com)


Copyright © Byrds Research & Publishing, Ltd., 1997–2011. All rights reserved.